
NEC's Initiatives on AI Governance toward Respecting Human Rights

Vol.17 No.2 June 2024 Special Issue on Revolutionizing Business Practices with Generative AI: Advancing the Societal Adoption of AI with the Support of Generative AI Technologies

NEC has formulated the “NEC Group AI and Human Rights Principles” to ensure that its business activities related to AI utilization respect human rights. To put AI governance into practice, it has prepared internal systems and rules, developed talent, and taken other measures. NEC also strengthens its ability to respond to new challenges arising from AI utilization by running the Digital Trust Advisory Council, which is composed of a variety of external experts. In this paper, we introduce NEC’s initiatives on AI governance in its AI businesses, including biometric authentication.

1. Introduction

In recent years, advancements in AI technology have led to the creation of new services and innovations. For example, AI is used in various fields such as call center support, dynamic pricing, optimization of search engines, high-speed trading, and more. In addition, biometric authentication is used in government agencies, airports, public facilities, and entertainment facilities for access control, identity verification, hospitality, and other purposes, and it is becoming increasingly ubiquitous in our daily lives. The implementation of AI in society and the utilization of data, including biometric information (hereinafter referred to as “AI utilization”), have the potential to enrich people’s lives.

However, misuse of technology can lead to human rights issues such as invasion of privacy and discrimination, causing significant harm to consumers. Examples include discrimination based on gender or race in loan assessments or hiring processes; tracking of individuals’ behavior, interests, or preferences without their consent; and misuse of surveillance by governments, all of which can infringe on privacy and freedom. If such incidents occur, they may not only result in the termination of the service in question but also damage the reputation of the service provider and heighten concerns about new technologies such as AI in general.

Therefore, companies involved in new technologies such as AI must implement appropriate governance over their development and use. Promoting AI governance can help these companies avoid both reputational risks after a service has been launched and opportunity losses caused by excessive risk aversion, and it allows them to improve their governance based on external feedback on their initiatives. Doing so not only enhances the corporate value of individual companies but also helps society as a whole enjoy the value of AI while reducing risks for users.

NEC believes that AI utilization enables it to provide values such as safety, security, fairness, and efficiency. NEC therefore implements AI governance initiatives to ensure that its business activities related to AI utilization respect human rights. From Section 2 onward, we describe these initiatives in detail.

2. Implementation Framework for AI Governance

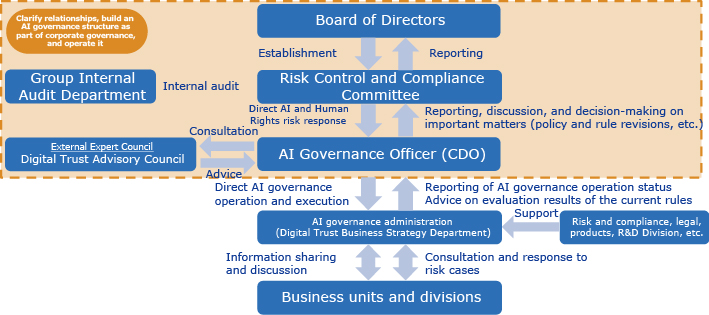

At NEC, the Digital Trust Business Strategy Division (now the Digital Trust Business Strategy Department) was established in 2018 to create and promote companywide strategies for incorporating respect for human rights into business operations related to AI utilization1). In 2019, NEC formulated the “NEC Group AI and Human Rights Principles” (hereinafter referred to as the Companywide principles)2). In addition, as part of its corporate governance, NEC has built an AI governance structure, appointed the Chief Digital Officer (CDO) as the AI Governance Officer, and clarified the relationships with the Risk Control and Compliance Committee and the Board of Directors. NEC has also established the Digital Trust Advisory Council, a council of external experts, and actively collaborates with external parties to address AI governance as part of its management agenda (Fig. 1).

3. NEC Group AI and Human Rights Principles

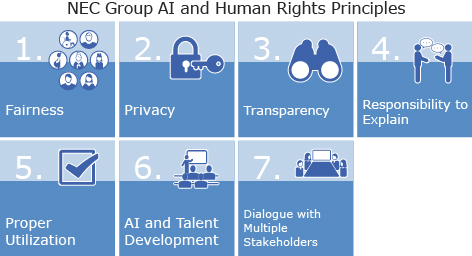

The Digital Trust Business Strategy Department developed the Companywide principles based on domestic and international principles as well as the company’s vision, values, and business activities. The principles were formulated in April 2019 after dialogues with a range of internal and external stakeholders: internal departments such as research and development, sustainability, risk management, marketing, and the business divisions, as well as external experts, NPOs, and consumers. The Companywide principles are intended to guide employees to treat respect for privacy and human rights as the highest priority in business operations related to the social implementation of AI utilization. As depicted in Fig. 2, the principles focus on seven main points: Fairness, Privacy, Transparency, Responsibility to Explain, Proper Utilization, AI and Talent Development, and Dialogue with Multiple Stakeholders.

4. Initiatives toward AI Governance Implementation

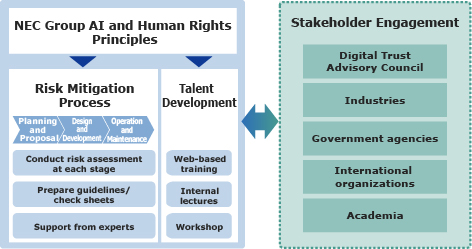

To implement the Companywide principles, the Digital Trust Business Strategy Department takes the lead in preparing internal systems and conducting employee training, among other activities. The following subsections introduce initiatives to identify and address risks through the risk mitigation process, to improve employee literacy through talent development, and to incorporate diverse internal and external opinions through stakeholder engagement (Fig. 3).

4.1 Risk mitigation process

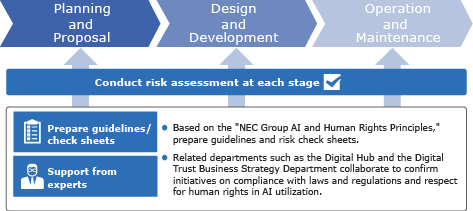

We have established a framework for conducting risk checks and implementing countermeasures for AI utilization at each phase, starting from the planning and proposal phase. The framework consists of companywide rules that specify the governance structure and the fundamental items to be complied with, guidelines and manuals that specify required actions and operation flows, and risk check sheets (Fig. 4). When conducting risk checks and implementing countermeasures, the Digital Trust Business Strategy Department and related departments work together to confirm that each initiative complies with laws and regulations and respects human rights, and to implement the necessary measures. Furthermore, to enable customers and partners to utilize AI appropriately, we draw on NEC’s expertise and knowledge to provide design samples and to support the publication of usage goals.

4.2 Talent development

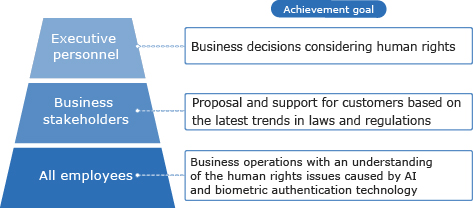

Based on the Companywide principles, we set goals and provide training tailored to the positions of officers and employees at NEC and its domestic and overseas affiliated companies, so that they can take appropriate actions to respect human rights in business activities (Fig. 5). Web-based training for all employees covers topics such as AI technology and the importance of AI ethics, trends in relevant laws and regulations, human rights and privacy considerations in AI utilization, the Companywide principles, and related operations. In addition, external experts are invited to give lectures, including discussions of the latest market trends and case studies, to deepen the understanding of AI business stakeholders and management.

4.3 Stakeholder engagement

To keep pace with social trends such as changes in laws, regulations, and social acceptance, we collaborate with a variety of stakeholders. The Digital Trust Advisory Council, which acts as an advisory body to the AI Governance Officer, consists of external experts with specialized knowledge of legal systems, human rights and privacy, and ethics, including lawyers, legal scholars, representatives of NPOs working in fields such as sustainability and human rights, and representatives of consumer groups. Through this council, we incorporate diverse external opinions to strengthen our ability to address new challenges associated with AI utilization. In July 2023, we held a council meeting on the theme of generative AI to solicit opinions on what NEC should do in its roles as both a user and a platform provider of generative AI, and how it can contribute to the social implementation of generative AI. The opinions obtained will be reflected in future initiatives.

Furthermore, we actively collaborate with various stakeholders in industry, government agencies, international organizations, and academia, both in Japan and abroad, with a view to building a framework for a society in which AI is widely used.

5. Agile Operation

These AI governance initiatives are implemented in accordance with domestic and international laws and guidelines. Following the concept of agile governance outlined in the “Governance Guidelines for Implementation of AI Principles,” published by Japan’s Ministry of Economy, Trade and Industry in July 2021, we also adapt flexibly to changes in the social environment and review our internal rules and operations accordingly. In 2023, we established a policy to actively utilize generative AI (large language models, LLMs) in internal operations, research and development, and business activities. We promote proactive and responsible utilization through measures such as developing guidelines and rules in accordance with the Companywide principles and related internal rules, ensuring proper use through continuous analysis and evaluation of changes in social conditions, providing internal training to improve employee literacy, and establishing a dedicated internal help desk staffed by experts.

6. Conclusion

NEC will continue to provide and utilize AI with priority given to respecting human rights, in alignment with NEC’s Purpose of creating the social values of safety, security, fairness, and efficiency to promote a more sustainable world where everyone has the chance to reach their full potential.

References

Ministry of Economy, Trade and Industry: Governance Guidelines for Implementation of AI Principles Ver. 1.1

Authors’ Profiles

Assistant Manager

Digital Trust Business Strategy Department

Director

Digital Trust Business Strategy Department

General Manager

Digital Trust Business Strategy Department