
Visual Inspection Solutions Based on the Application of Deep Learning to Image Processing Controllers

Vol. 14 No. 1, January 2020, Special Issue on AI and Social Value Creation

The use of artificial intelligence (AI) in product inspection applications is becoming increasingly common. This paper examines a joint effort between NEC and Nippon Electro-Sensory Devices (NED), a manufacturer specializing in line sensor systems, to create a defective product detection system that incorporates NEC’s RAPID Machine Learning into the image processing software used in image inspection systems. In addition to delivering customer value by making it easy to build an image inspection system that incorporates machine learning, we have incorporated other cutting-edge NEC technology into NED’s products with a view to deploying these solutions in new fields and promoting digitalization in manufacturing.

1. Introduction

Japan’s high-quality manufacturing is supported by a stringent quality assurance regime in which every aspect of each product is visually inspected by experienced inspectors. Maintaining such a rigorous human-dependent quality assurance system is proving difficult with the accelerating retirement of experienced inspectors and the need for improved staffing efficiency. To deal with these issues, many manufacturers are turning to AI technology — mainly deep learning — for a solution.

One of the most critical drawbacks of previously available product inspection solutions was that image capture and image processing or analysis were provided only as separate solutions. No single solution could handle both image capture and analysis, so each stage had to be processed separately and additional processing was needed to link the results. By integrating the image analysis version of NEC’s Advanced Analytics-RAPID Machine Learning deep learning software with TechView, a proprietary product of Nippon Electro-Sensory Devices (NED), which is a leader in image capture and analysis systems, we have created a solution that unifies image capture and analysis in a single system. The system is highly flexible and can be quickly and easily tailored to the requirements of a wide range of product inspection applications.

TechView makes it easy to build applications that work in conjunction with various devices, while RAPID Machine Learning learns from images, allowing more sophisticated and flexible visual inspection judgment criteria to be developed than is possible with conventional rules-based image analysis technology. Seamless integration of these two systems makes it easy to build inspection systems that use both machine vision and deep learning. At the same time, the high-quality teaching images required for RAPID Machine Learning can be collected automatically using TechView’s image processing library. Moreover, because systems can be built on-site, the solution also suits users who do not wish to store inspection data at an external location.

In this paper, we describe TechView and its image capturing capability in section 2. Section 3 provides an overview of RAPID Machine Learning, and section 4 shows how the two products work together and discusses a use case. We conclude with a look at future prospects in section 5.

2. TechView and Image Capturing System

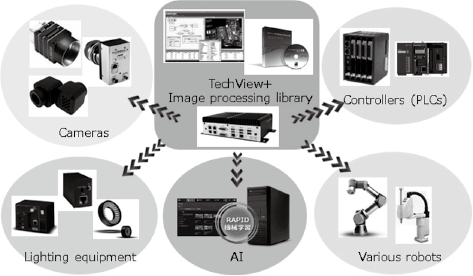

Developed by NED, TechView is a hardware-integrated controller that incorporates an image processing library. It can be connected to various devices and tools required for image processing and is used in many image processing-based visual inspection systems.

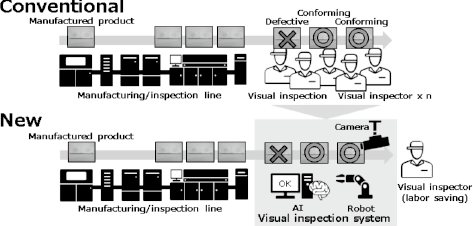

A visual inspection system automates the visual inspection procedure, minimizing or eliminating the need for human labor and ensuring uniform quality standards (Fig. 1). However, two significant issues arise when a conventional visual inspection system is introduced. First, it is difficult to allocate resources to program development when constructing a multi-part system that connects the various devices and controllers performing a series of processes utilizing AI, from camera and lighting control to image capture, image processing, image analysis, and results display (Fig. 2). Second, it is difficult to capture images that accurately depict the characteristics of the inspected objects. Below, we explain how these problems can be solved.

2.1 Features of TechView

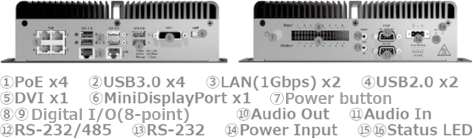

An external view of TechView is shown in Fig. 3. The unit incorporates a wide selection of interfaces.

The two most important features of TechView are that it requires no programming skills and that it can be connected to various devices, including cameras, lighting equipment, robots, and controllers, as well as to AI. Because no programming is necessary, image processing can be designed using flowcharts rather than through customized development. Thanks to the built-in image processing library, a broad range of processing capabilities is available, such as pattern matching and image processing operations (binarization, cutout, brightness control, etc.) (Fig. 4).

Together these features make it possible to use TechView to create the system flow shown in Fig. 2 without having to develop customized programs.
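In TechView these operations are configured as flowchart blocks rather than written as code. Purely to illustrate what such blocks typically compute, the following is a minimal NumPy sketch of brightness control, binarization, cutout, and pattern matching (here, brute-force normalized cross-correlation), chained by a small flow runner. All function names, including `run_flow`, are hypothetical and are not TechView’s API; a production library would use optimized implementations.

```python
import numpy as np


def adjust_brightness(img: np.ndarray, gain: float = 1.0, offset: float = 0.0) -> np.ndarray:
    """Linear brightness control (img * gain + offset), clipped to the 8-bit range."""
    out = img.astype(np.float64) * gain + offset
    return np.clip(out, 0, 255).astype(np.uint8)


def binarize(img: np.ndarray, threshold: int) -> np.ndarray:
    """Pixels brighter than the threshold become 255; all others become 0."""
    return np.where(img > threshold, 255, 0).astype(np.uint8)


def cutout(img: np.ndarray, top: int, left: int, height: int, width: int) -> np.ndarray:
    """Crop a rectangular region of interest."""
    return img[top:top + height, left:left + width]


def match_template(img: np.ndarray, tmpl: np.ndarray) -> tuple[int, int, float]:
    """Brute-force normalized cross-correlation; returns (row, col, score) of the best match."""
    img = img.astype(np.float64)
    t = tmpl.astype(np.float64) - tmpl.mean()
    t_norm = np.sqrt((t * t).sum())
    th, tw = tmpl.shape
    best = (0, 0, -1.0)
    for r in range(img.shape[0] - th + 1):
        for c in range(img.shape[1] - tw + 1):
            w = img[r:r + th, c:c + tw]
            wz = w - w.mean()
            denom = np.sqrt((wz * wz).sum()) * t_norm
            if denom == 0:  # flat window or flat template: correlation undefined
                continue
            score = float((wz * t).sum() / denom)
            if score > best[2]:
                best = (r, c, score)
    return best


def run_flow(img: np.ndarray, steps) -> np.ndarray:
    """Apply each processing step in order, like blocks in a flowchart."""
    for step in steps:
        img = step(img)
    return img


if __name__ == "__main__":
    frame = np.zeros((8, 8), dtype=np.uint8)
    frame[2:5, 3:6] = 200  # a bright 3x3 spot standing in for a feature of interest
    roi = run_flow(frame, [
        lambda im: adjust_brightness(im, gain=1.1),
        lambda im: cutout(im, 1, 1, 6, 6),
        lambda im: binarize(im, 128),
    ])
    print(roi)
    print(match_template(frame, frame[1:6, 2:7]))
```

Keeping each step a plain function of an image mirrors the flowchart idea: steps can be reordered or swapped without rewriting the surrounding flow.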

2.2 Image capturing system

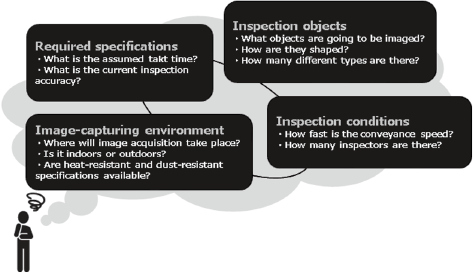

When capturing images of inspection objects, it is important to consider how to configure the cameras and lighting equipment so that the images accurately capture the characteristics of defects (Fig. 5).

For example, when a line scan camera is used to continuously capture a stream of moving inspection objects, the most important requirement is that the camera’s resolution be high enough to accurately capture the characteristics of any product defects. To select an appropriate camera, the resolution must be considered in both the width direction (X-axis) and the movement direction (Y-axis) of the object being inspected. The resolution in the width direction can be calculated from the field of view that covers the object and the number of sensor pixels, while the resolution in the movement direction can be calculated from the conveyance speed of the object and the minimum scanning frequency of the camera1).
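The arithmetic behind this can be sketched as follows, assuming the standard line scan relations: width-direction resolution equals the field of view divided by the number of sensor pixels, and movement-direction resolution equals the conveyance speed divided by the line (scan) rate. The class and method names are hypothetical, and the rule that a defect should span at least two pixels on each axis is an illustrative assumption, not a figure from this paper.

```python
from dataclasses import dataclass


@dataclass
class LineScanSetup:
    fov_width_mm: float    # field of view across the object (X axis)
    sensor_pixels: int     # number of pixels on the line sensor
    speed_mm_per_s: float  # conveyance speed of the object (Y axis)

    def x_resolution_mm(self) -> float:
        """Object width covered by one pixel in the width direction."""
        return self.fov_width_mm / self.sensor_pixels

    def y_resolution_mm(self, line_rate_hz: float) -> float:
        """Distance the object travels between two consecutive line scans."""
        return self.speed_mm_per_s / line_rate_hz

    def min_line_rate_hz(self) -> float:
        """Lowest scan frequency giving the same resolution in Y as in X (square pixels)."""
        return self.speed_mm_per_s / self.x_resolution_mm()

    def resolves(self, defect_mm: float, line_rate_hz: float, min_pixels: float = 2.0) -> bool:
        """True if a defect of this size spans at least `min_pixels` on both axes (assumed rule)."""
        return (defect_mm / self.x_resolution_mm() >= min_pixels
                and defect_mm / self.y_resolution_mm(line_rate_hz) >= min_pixels)


if __name__ == "__main__":
    # Hypothetical setup: 409.6 mm field of view, 4096-pixel sensor, 1 m/s conveyor.
    setup = LineScanSetup(fov_width_mm=409.6, sensor_pixels=4096, speed_mm_per_s=1000.0)
    print(setup.x_resolution_mm())        # about 0.1 mm per pixel
    print(setup.min_line_rate_hz())       # about 10,000 lines per second
    print(setup.resolves(0.3, 10_000.0))  # a 0.3 mm defect spans about 3 pixels per axis
```

Scanning slower than `min_line_rate_hz` stretches each pixel along the movement direction, which is why the camera’s scan frequency must be chosen together with the conveyance speed.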

NED has specialized in industrial line scan cameras for more than four decades, providing an ideal foundation for the construction of image capturing systems in cooperation with NEC. Optimized to acquire images that maximize the visibility of defects, these systems incorporate not only line scan cameras but also area cameras and infrared cameras.

3. Image Analysis Version of RAPID Machine Learning

RAPID Machine Learning is an easy-to-use software application featuring an intuitive GUI that enables even non-expert users to rapidly develop image recognition applications that use deep learning. It has been successfully used to automate visual inspection at numerous sites, helping reduce the need for human labor in quality assurance processes where visual inspection is used.

When introducing deep learning to visual inspection systems, two main issues need to be addressed. The first is that assembly lines with high yield rates cannot provide enough images of defective products for deep learning to learn the characteristics of both defective and normal products. The second is that deep learning generally does not provide any reasons or basis for its judgments, making it difficult for users to devise ways to improve recognition accuracy. Below, we introduce the technologies that we believe will solve these issues.

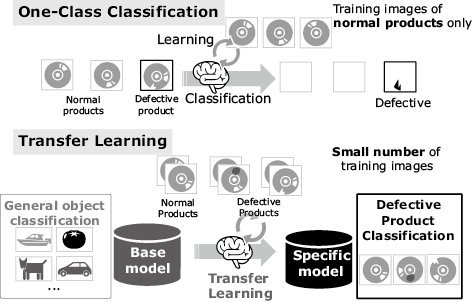

3.1 One-class classification

To solve the problem of extracting the characteristics of defective products when only normal products are available, one-class classification learns from images of normal products and quantifies how much an inspected product deviates from them (Fig. 6, top). Until now, this type of classification had difficulty coping with cases where the common characteristics of normal products were extremely complex, resulting in “over-detection,” in which a normal product is misclassified as defective. This technology effectively suppresses over-detection even in cases where the surface patterns of normal products are random.

Because the model is trained only on normal products, it can be created without any images of defective products.
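The general idea of one-class classification can be sketched with a simple textbook method swapped in for illustration: fit a Gaussian to feature vectors of normal products, score new products by their Mahalanobis distance from that distribution, and set the decision threshold at a high quantile of the scores of the normal training data. This is not NEC’s algorithm (a single Gaussian would not by itself handle the random surface patterns described above), and it assumes that feature vectors have already been extracted from the images upstream. The class name and the `quantile` and `ridge` parameters are hypothetical.

```python
import numpy as np


class GaussianOneClass:
    """Trained on normal products only; flags samples far from the normal distribution."""

    def __init__(self, quantile: float = 0.99, ridge: float = 1e-6):
        self.quantile = quantile  # fraction of normal training samples accepted as normal
        self.ridge = ridge        # small diagonal term keeping the covariance invertible

    def fit(self, X) -> "GaussianOneClass":
        X = np.asarray(X, dtype=float)
        self.mean_ = X.mean(axis=0)
        cov = np.cov(X, rowvar=False) + self.ridge * np.eye(X.shape[1])
        self.prec_ = np.linalg.inv(cov)
        # The threshold comes from normal data alone: no defective samples are needed.
        self.threshold_ = float(np.quantile(self.score(X), self.quantile))
        return self

    def score(self, X) -> np.ndarray:
        """Mahalanobis distance of each row from the mean of the normal products."""
        d = np.asarray(X, dtype=float) - self.mean_
        return np.sqrt(np.einsum("ij,jk,ik->i", d, self.prec_, d))

    def predict_defective(self, X) -> np.ndarray:
        return self.score(X) > self.threshold_


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    normal_features = rng.normal(size=(500, 4))  # stand-in features of normal products
    model = GaussianOneClass().fit(normal_features)
    print(model.predict_defective(np.array([[0.1, -0.2, 0.0, 0.3], [8.0, 8.0, 8.0, 8.0]])))
```

Raising `quantile` trades fewer over-detections of normal products for a higher risk of missing subtle defects.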

3.2 Transfer learning

Another way of dealing with the first issue is transfer learning, which achieves high-precision image recognition with just a few teaching images (Fig. 6, bottom) by reusing an existing model that can classify general objects. Simply train the system on a small amount of additional data about the problem to be solved (such as scratch detection on a metallic part) to create a new model specialized for that problem.
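The simplest form of transfer learning freezes a pretrained network, uses its outputs as feature vectors, and trains only a small new classification head on the few task-specific teaching images. The sketch below shows that head-training step with logistic regression in NumPy, assuming the feature vectors have already been produced by some pretrained general-object model (not included here). It does not represent how RAPID Machine Learning implements transfer learning, which may also fine-tune deeper layers. `train_head`, `predict_proba`, and their parameters are hypothetical.

```python
import numpy as np


def train_head(features, labels, lr: float = 0.1, epochs: int = 500, l2: float = 1e-3) -> dict:
    """Fit a logistic-regression head on frozen features (label 1 = defect, 0 = normal)."""
    X = np.asarray(features, dtype=float)
    y = np.asarray(labels, dtype=float)
    mu, sigma = X.mean(axis=0), X.std(axis=0) + 1e-8  # standardize the frozen features
    Xn = (X - mu) / sigma
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(Xn @ w + b)))       # predicted defect probability
        w -= lr * (Xn.T @ (p - y) / len(y) + l2 * w)  # gradient of log loss + L2 penalty
        b -= lr * float(np.mean(p - y))
    return {"w": w, "b": b, "mu": mu, "sigma": sigma}


def predict_proba(head: dict, features) -> np.ndarray:
    """Defect probability for new feature vectors from the same frozen backbone."""
    Xn = (np.asarray(features, dtype=float) - head["mu"]) / head["sigma"]
    return 1.0 / (1.0 + np.exp(-(Xn @ head["w"] + head["b"])))


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    # Stand-ins for backbone features of ten normal and ten scratched parts.
    X = np.vstack([rng.normal(-2.0, 1.0, (10, 8)), rng.normal(2.0, 1.0, (10, 8))])
    y = np.array([0] * 10 + [1] * 10)
    head = train_head(X, y)
    print(predict_proba(head, X).round(2))
```

Because only the head’s few parameters are learned, a handful of labeled examples can suffice, provided the pretrained features already separate the classes reasonably well.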

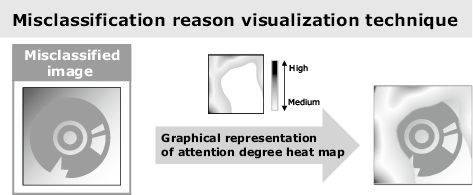

3.3 Misclassification reason visualization technique

To help users understand the reasons behind a judgment made by deep learning, the misclassification reason visualization technique visualizes the basis for the judgment (Fig. 7). We are currently evaluating this proprietary, cutting-edge technology to determine whether to incorporate it in the RAPID Machine Learning software. To show how a model came to misclassify an image, this technology generates a heat map indicating how much attention the model pays to each part of the product image. You can use this information to refine your model. For example, if you know that the model was paying attention to the background, you can hypothesize that accuracy may improve if teaching images with more varied backgrounds are used.
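NEC’s visualization technique is not described in detail here, so the sketch below swaps in occlusion sensitivity, a simple, widely used, model-agnostic way to produce such a heat map: cover one patch of the image at a time and record how much the model’s score drops. Regions whose occlusion changes the score most are the ones the model relies on. The `score_fn` callable stands in for any trained model, and the patch size, stride, and fill value are illustrative assumptions.

```python
from typing import Callable

import numpy as np


def occlusion_heatmap(image: np.ndarray, score_fn: Callable[[np.ndarray], float],
                      patch: int = 4, stride: int = 4, fill: float = 0.0) -> np.ndarray:
    """Average score drop when each region is occluded; larger = more model attention."""
    h, w = image.shape[:2]
    base = score_fn(image)
    heat = np.zeros((h, w))
    counts = np.zeros((h, w))
    for r in range(0, h - patch + 1, stride):
        for c in range(0, w - patch + 1, stride):
            occluded = image.copy()
            occluded[r:r + patch, c:c + patch] = fill
            heat[r:r + patch, c:c + patch] += base - score_fn(occluded)
            counts[r:r + patch, c:c + patch] += 1
    return heat / np.maximum(counts, 1)  # average where patches overlap


if __name__ == "__main__":
    # Toy "model" that only looks at the top-left corner, as if it attended to the background.
    corner_model = lambda im: float(im[:4, :4].mean())
    print(occlusion_heatmap(np.ones((8, 8)), corner_model))
```

A heat map concentrated on the background, as in this toy case, is the kind of finding that suggests adding teaching images with more varied backgrounds.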

4. Combination of TechView and RAPID Machine Learning

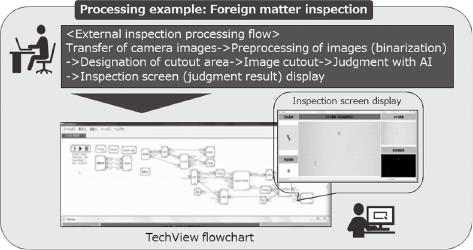

In the TechView flowchart, judgment processing can be performed using RAPID Machine Learning. For inspection items that have traditionally relied on the senses of human inspectors and for which conventional image processing is ineffective, RAPID Machine Learning’s advanced AI algorithms, such as one-class classification, can easily be utilized.

Fig. 8 shows a flowchart for an inspection that checks for the presence of foreign matter. By designing the flowchart so that the pre-trained AI model is invoked only when conventional image processing cannot reach a clear judgment, judgment processing can be executed efficiently. All required settings can also be made in the flowchart. As long as a simplified inspection image display is adequate, you can build a visual inspection system that exploits AI without developing a new program.
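The branching described above can be sketched as a two-stage cascade: a fast rule-based score settles clear cases, and only ambiguous images are passed to the trained AI model. This is an illustrative sketch, not the flowchart in Fig. 8; `rule_score`, `ai_model`, and the three threshold values are hypothetical placeholders that would be tuned per inspection item.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Decision:
    defective: bool
    decided_by: str  # "rule" for conventional image processing, "ai" for the trained model


def judge(image: Any,
          rule_score: Callable[[Any], float],
          ai_model: Callable[[Any], float],
          accept_below: float = 0.2,
          reject_above: float = 0.8,
          ai_threshold: float = 0.5) -> Decision:
    """Call the (slower) AI model only when the rule-based score is inconclusive."""
    s = rule_score(image)
    if s < accept_below:
        return Decision(defective=False, decided_by="rule")  # clearly free of foreign matter
    if s > reject_above:
        return Decision(defective=True, decided_by="rule")   # clearly contains foreign matter
    return Decision(defective=ai_model(image) > ai_threshold, decided_by="ai")


if __name__ == "__main__":
    calls = []

    def fake_ai(img):
        calls.append(img)  # record which images actually reached the AI model
        return 0.7

    for name, score in [("a", 0.1), ("b", 0.95), ("c", 0.5)]:
        print(name, judge(name, lambda _img, s=score: s, fake_ai))
    print("AI calls:", len(calls))
```

Since most images on a high-yield line fall into the clear-cut ranges, the model is consulted for only a small fraction of images, which keeps overall judgment processing fast.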

Where our conventional system has already been installed on plant lines, the combination of TechView and RAPID Machine Learning makes it possible to perform high-precision, high-speed judgment processing without upgrading the hardware.

5. Future Prospects

The automation of the inspection processes discussed in this paper is positioned as one of the most important elements in the digitalization of the entire factory. Automating inspection processes with TechView and RAPID Machine Learning not only reduces the number of inspectors and processes but also helps assure reliable, uniform inspection quality that does not depend on human skill or experience. When the inspection results are combined with traceability data for the components used in the manufacturing process, it is also possible to promptly identify which process was running when a problem occurred2). Moreover, analyzing the correlation between inspection results and facility and operation data is expected to contribute to yield improvement and cost reduction.

Improving throughput and enhancing quality control will become ever more important in manufacturing. Achieving this requires the digitalization of entire supply chains. NEC is proposing NEC DX Factory, a concept for future manufacturing as reshaped by digital transformation (Photo). By applying digitalization to people, things, and facilities and feeding simulation results back into every process from design and manufacturing to shipping and distribution, we will foster innovative manufacturing systems in which autonomous robots and production facilities operate in cooperation with humans. Our advanced technology will enable us to develop high-value solutions that address a broad range of customer issues and support the digitalization of manufacturing plants.

References

- 1) IMAI Shinji: Line scan camera, How to choose an industrial camera and how to use it, Image Lab/Separate, April 2018 (Japanese)

- 2)

Authors’ Profiles

Manager

AI Platform Division

AI Analytics Division

Manager

Smart Industry Division

Smart Industry Division

Assistant Manager

Embedded Business Sales Division

Manager

Platform Solution Division